Jiayu (Mila) Wang

Contact: milawang [at] cs [dot] wisc [dot] edu

I am Jiayu (pronunciation: “Jee-ah-yü Wahng”), a fourth-year PhD candidate in Computer Sciences at UW-Madison. I am fortunate to be advised by Prof. Aws Albarghouthi and Prof. Fred Sala (Sprocket Lab).

I am passionate about building efficient and intelligent agentic systems. My recent work focuses on:

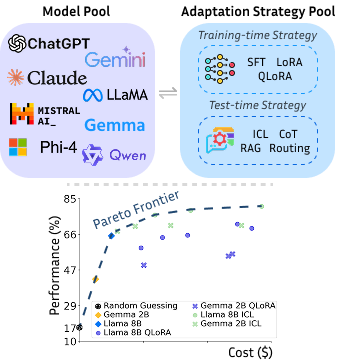

- Data- and compute-efficient adaptation of Large Language Models (LLMs) and Multimodal Large Language Models (MLLMs) (e.g., cost-effective adaptation by augmenting model routing with an expanded pool of adaptation strategies; model-strategy-tool selection)

- Reasoning in agentic systems (e.g., deep research, dissecting mathematical reasoning under RL, grammar-aligned decoding for logical reasoning, and spatial reasoning)

I’m always happy to discuss research, answer questions, or just chat! Feel free to reach out through email or my socials.

Outside of research, I love playing tennis 🎾 and try to get on the court as often as I can, usually 4–5 times a week.

I am currently on the 2025–2026 job market! Feel free to reach out if you think there might be a good fit!

news

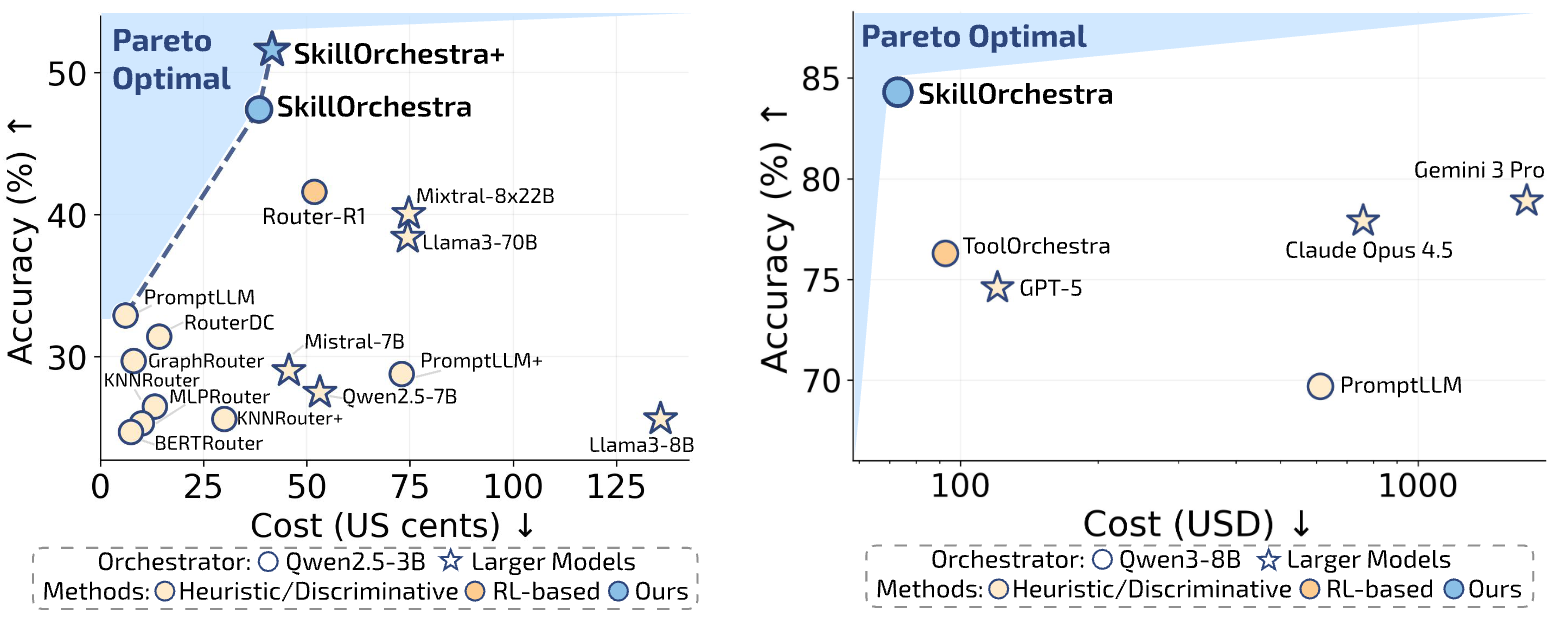

| Feb 24, 2026 | 🚀 SkillOrchestra is now available on arXiv! A framework for skill-aware agent orchestration that learns fine-grained skills from execution experience. It reduces learning costs by 700x vs Router-R1 and 300x vs ToolOrchestra while boosting performance up to 22.5%. |

|---|---|

| Jan 27, 2026 | LiveResearchBench, a live benchmark for deep research and a comprehensive evaluation suite that closely aligns with human judgment across six dimensions, has been accepted to ICLR 2026, check out our paper, code, dataset! 🎉🎉 |

| Sep 18, 2025 | SPARKLE is accepted to NeurIPS 2025, check out our paper, code, dataset, and checkpoints! 🎉🎉 |

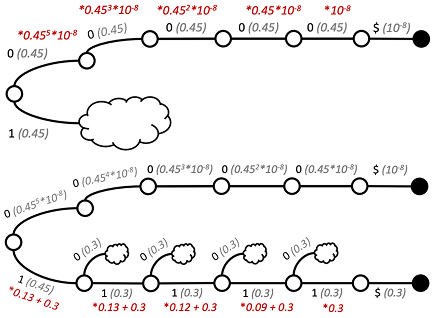

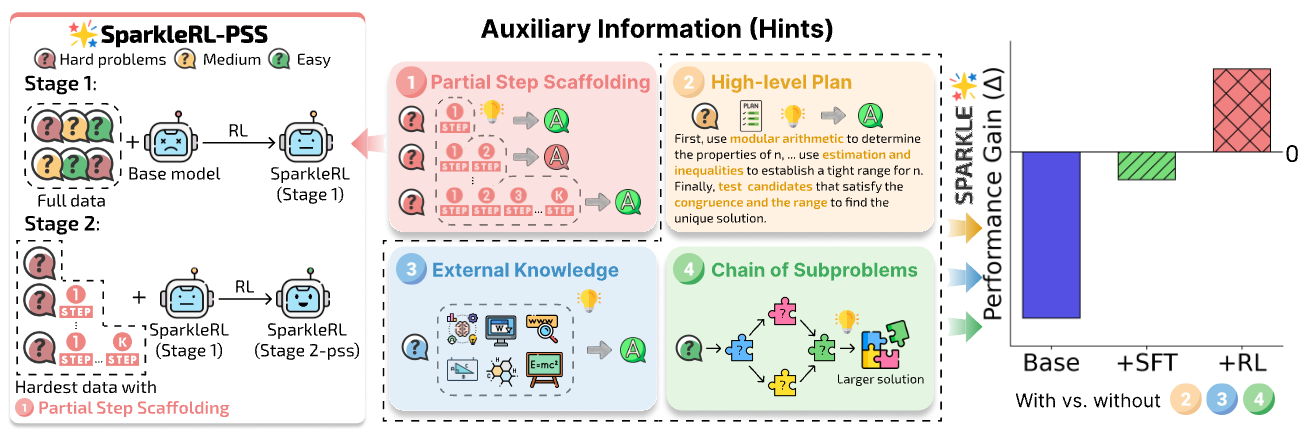

| Jun 5, 2025 | 🚀 SPARKLE preprint is now live on arXiv! Reinforcement learning has driven impressive gains in LLM reasoning—but what exactly does RL improve? SPARKLE answers this question with a fine-grained evaluation framework that dissects reasoning into plan-following, problem decomposition, and knowledge use. The results are surprising: explicit plans can actually hurt on the hardest problems, yet RL-tuned models remain far more robust and flexible in handling them. We also find clear gains in how RL enhances knowledge integration. And we push back on a common myth: hard problems can be useful for RL—even when they seem unrewarding. SPARKLE shows how to turn those tough cases into real training signal. |

| Apr 30, 2025 | 🚀 COSMOS preprint is now available on arXiv! With training-time and test-time adaptation strategies for LLMs exploding in number, figuring out the best one can feel like a wild goose chase. COSMOS makes it easy — predicting performance and cost accurately and efficiently so you don’t have to burn GPU hours testing every option. Smarter choices, fewer experiments. |

selected publications (*equal contribution)

2026

2025

- LiveResearchBench: A Live Benchmark for User-Centric Deep Research in the Wild. In Proceedings of the 14th International Conference on Learning Representations (ICLR 2026), Oct 2025


- Beyond Accuracy: Dissecting Mathematical Reasoning for LLMs Under Reinforcement Learning. In Proceedings of the 39th Conference on Neural Information Processing Systems (NeurIPS 2025), Jun 2025


2024

-

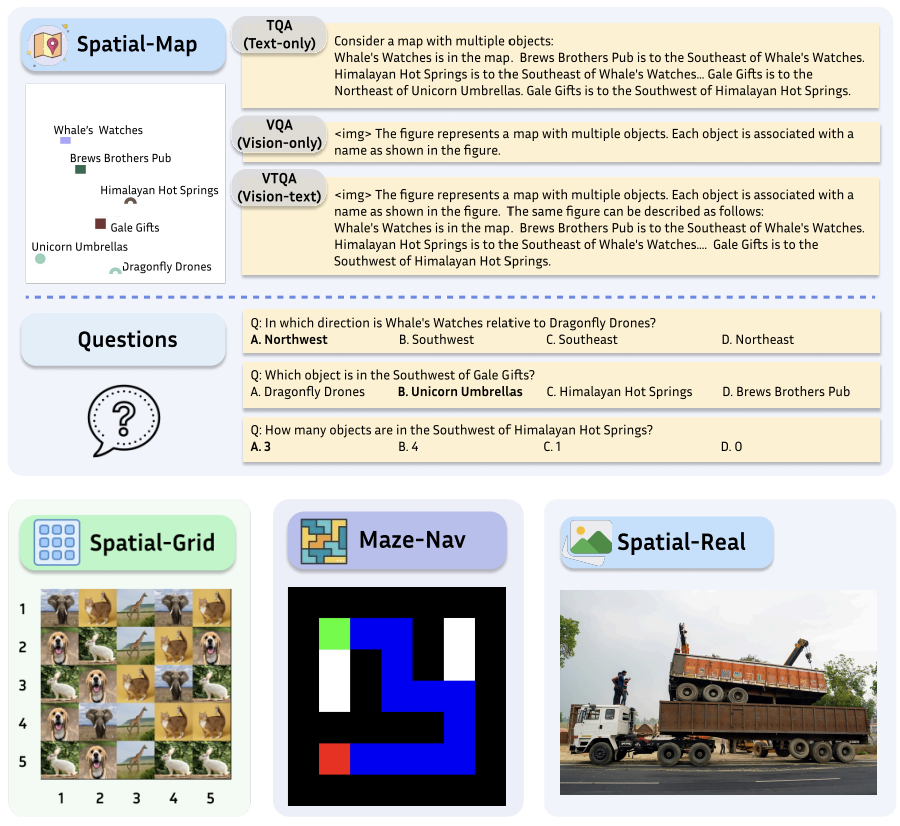

Is A Picture Worth A Thousand Words? Delving Into Spatial Reasoning for Vision Language ModelsIn Proceedings of the 38th Annual Conference on Neural Information Processing Systems (NeurIPS 2024), Jun 2024
